Live Speech Driven Head-and-Eye Motion Generators

Binh Huy Le, Xiaohan Ma, and Zhigang Deng

IEEE Transactions on Visualization and Computer Graphics (TVCG) 18(11), 2012

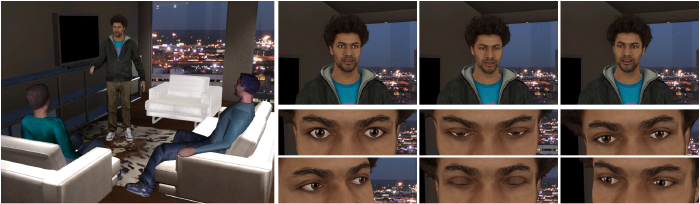

Abstract: This paper describes a fully automated framework to generate realistic head motion, eye gaze, and eyelid motion simultaneously based on live (or recorded) speech input. Its central idea is to learn separate yet inter-related statistical models for each component (head motion, gaze, or eyelid motion) from a pre-recorded facial motion dataset: i) Gaussian Mixture Models and a gradient descent optimization algorithm are employed to generate head motion from speech features; ii) a Nonlinear Dynamic Canonical Correlation Analysis model is used to synthesize eye gaze from head motion and speech features; and iii) nonnegative linear regression is used to model voluntary eyelid motion, and a log-normal distribution is used to describe involuntary eye blinks. Several user studies are conducted to evaluate the effectiveness of the proposed speech-driven head and eye motion generator using the well-established paired comparison methodology. Our evaluation results clearly show that this approach can significantly outperform the state-of-the-art head and eye motion generation algorithms. In addition, a novel mocap+video hybrid data acquisition technique is introduced to record high-fidelity head movement, eye gaze, and eyelid motion simultaneously.
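As a rough illustration of the involuntary-blink component mentioned in the abstract, the sketch below draws blink onset times from a log-normal distribution. It is not the paper's implementation: the abstract does not say which quantity is modeled as log-normal, so the choice of the inter-blink interval, the function name `sample_blink_times`, and the parameter values `mu` and `sigma` are all assumptions made for this example.

```python
import random


def sample_blink_times(duration_s, mu=1.0, sigma=0.5, seed=None):
    """Return blink onset times (in seconds) that fall within [0, duration_s).

    Assumption for illustration: the gap between consecutive blinks is
    log-normally distributed. mu and sigma are the mean and standard
    deviation of the underlying normal distribution; the defaults are
    placeholder values, not values fitted to any dataset.
    """
    rng = random.Random(seed)
    times = []
    # The first blink happens after one sampled interval.
    t = rng.lognormvariate(mu, sigma)
    while t < duration_s:
        times.append(t)
        # Each subsequent blink follows after another sampled interval.
        t += rng.lognormvariate(mu, sigma)
    return times


if __name__ == "__main__":
    # Print the sampled blink onsets for a 30-second clip.
    for onset in sample_blink_times(30.0, seed=42):
        print(f"blink at {onset:.2f} s")
```

In a real pipeline the two parameters would presumably be estimated from recorded blink data, and the resulting onsets would be combined with the voluntary eyelid motion produced by the regression model.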

Download: [paper] [video] [Errata Correction and Appendix for COA Calculation]

Video on YouTube

Images

|

||

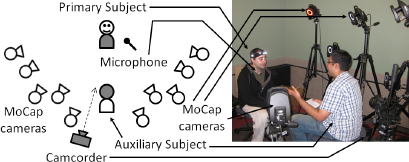

| We developed a hybrid data acquisition system to simultaneously capture head movement, eye gaze, and eyelid motion. Our system consists of a Canon EOS t2i camera, calibrated and synchronized with the VICON motion capture system. Left: Illustration of the data acquisition setup. Right: A snapshot of the data acquisition process. | Top left: The used camera calibration pattern. Top right: The used marker layout in the subject calibration step. Bottom: Examples of eye tracking results. Green dots indicate the eye probe regions and blue dots indicate the pupils. |

Bibtex

@article{BinhLe:TVCG:2012,
  author = {Binh H. Le and Xiaohan Ma and Zhigang Deng},
  title = {Live Speech Driven Head-and-Eye Motion Generators},
  journal = {IEEE Transactions on Visualization and Computer Graphics},
  volume = {18},
  issn = {1077-2626},
  year = {2012},
  pages = {1902-1914},
  doi = {http://doi.ieeecomputersociety.org/10.1109/TVCG.2012.74},
  publisher = {IEEE Computer Society},
  address = {Los Alamitos, CA, USA},
}